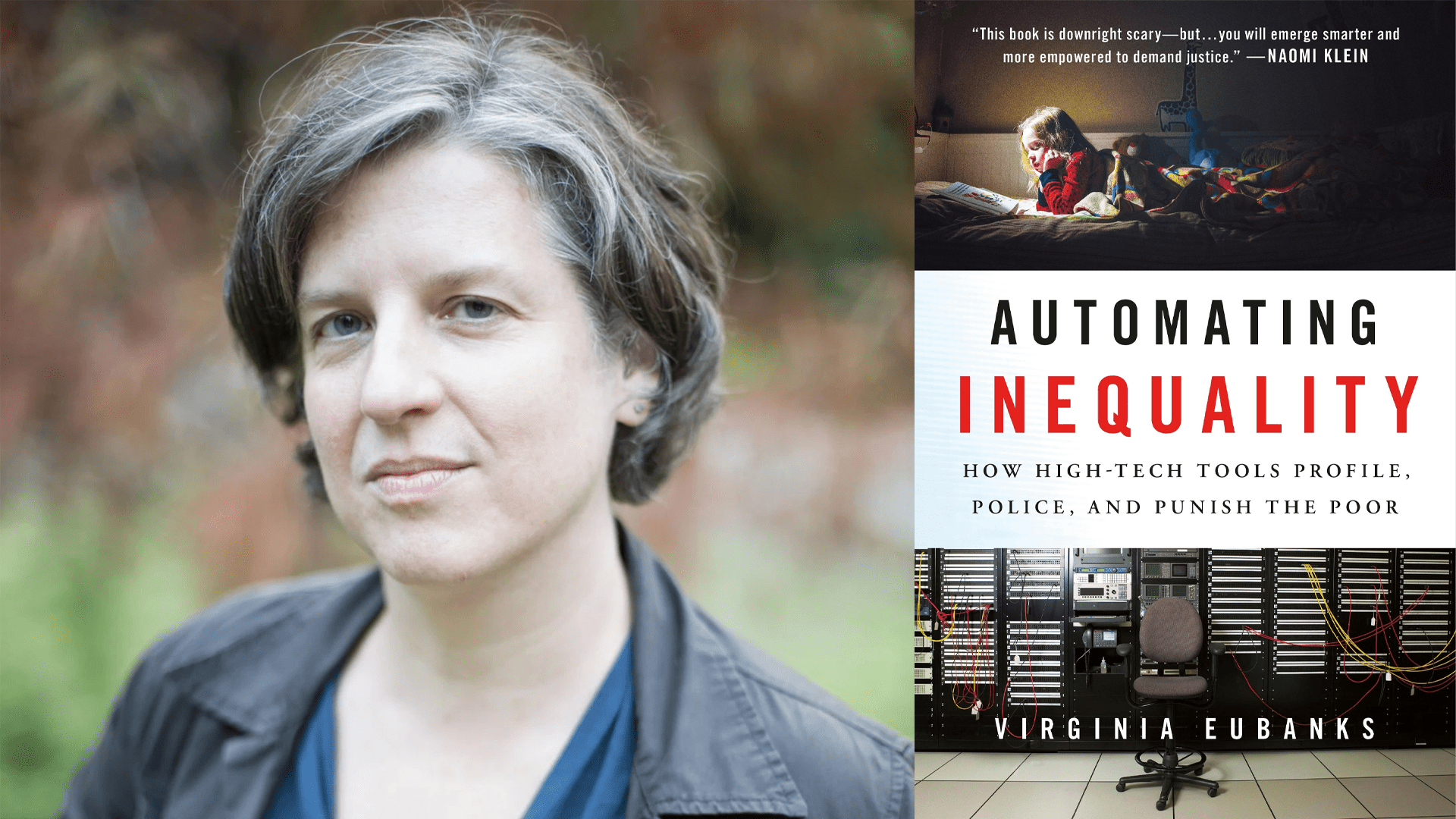

Poverty, technology and the stories we tell

In advance of Communities Against Poverty 2024 this Thursday (December 12), we interviewed our keynote speaker Dr. Virginia Eubanks, political scientist, Associate Professor at the Rockefeller College of Public Affairs and Policy at SUNY Albany, and author of the book Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. Dr. Eubanks’s work focuses on the intersection of social justice and technology, with particular emphasis on how the implementation of technology often leads to harms for people experiencing poverty.

CCLP: In your book you describe how close to home the difficulties of administrative burdens became, following your partner’s injuries and recovery, but you’d been writing about the intersection of technology and poverty long before that point. Administrative burdens of all types remain an underappreciated area of anti-poverty policy. What first drew you to this subject?

Dr. Virginia Eubanks: I always give credit for finding my way to this topic to Dorothy Allison, a young mom on public assistance who I worked with as part of a participatory research team back in the late 1990s. We were working together to design technology tools for a community of women living, working, and playing at the YWCA of Troy-Cohoes. The operating paradigm at the time was that the key issue of technology and social justice was access — that is, the fear was that poor and working people would not be included in the internet revolution.

But women in the YWCA community told different stories. They didn’t lack access to communications technologies: they came in contact with high-tech surveillance in nearly every aspect of their day-to-day lives. The most salient and poignant stories they told were often about interacting with computer systems in the public assistance office.

One day, Dorothy and I were sitting in a tech lab we had helped design at the YW, talking about her EBT card (a new debit-like card the state was using to distribute benefits). While she had some good things to say in terms of convenience and lowering stigma, she also told me that her caseworker was using the card to track her purchases and give her financial advice. She was incredibly disturbed by this invasive scrutiny, and I remember her laughing at the look of shock on my face. She said, “You should pay attention to what happens to us. They’re coming for you next.” And for the last twenty-five years, Dorothy’s voice has been in my head, guiding the questions I ask.

CCLP: In Colorado we’ve seen first-hand the negative effects of broken public benefit system software, with many Coloradans being dropped from Medicaid in particular, despite remaining eligible. How can policy advocates and policymakers ensure that the development and deployment of technology will be more accountable to the communities who must interact with those systems?

VE: This is the billion-dollar question. I tell the long and sordid story of public assistance in the United States, going all the way back to 1819. I don’t think we can understand why the technology we develop so often ends up punitive and dehumanizing until we understand this history.

Until there is a broad-based and powerful movement to give poor and working-class Americans power in the policymaking that most impacts their lives — we could look to history for examples like the Community Action Programs of the War on Poverty or the national Welfare Rights Movement — I don’t think there will be major changes to our current public assistance system. The deep social programming of the administrative technology we develop is influenced by the stories we tell about poverty in this country, and generally amplifies the problems already inherent in our policy: its focus on moral surveillance, assumption of scarcity, punitiveness, and administrative burden.

I don’t believe the technology will change until we change the stories we tell about care, inequality, and the state’s duty to protect its citizens from economic shocks. The cure for bad data may be better storytelling.

CCLP: What is a policy change you would like to see states put into effect, to protect low-income communities from the harms of the automated systems you describe in your book? (Either in terms of new legislation addressing these harms head-on, or perhaps in terms of factors to consider at the regulatory agency/rule-making level?)

VE: The change I’m thinking about the most these days is the need to harden systems against misuse by political actors. With the global rise in ethno-nationalist populism and authoritarianism, it would be deeply naive to assume that the systems we build with good intentions won’t be used for more nefarious purposes. The DACA list developed by the Obama administration to protect Dreamers could just as easily be used as an agenda for deportation.

danah boyd once wrote a lovely essay about designing public technology like a hacker — that is, from the assumption that the system will be misused. I think those of us who are involved in designing public technology need to start from this supposition as well — that the tools we help build may well eventually be used by totalitarians.

CCLP: In the years since you first started sounding the alarms about this subject, public attention to AI-driven decision-making has ramped up dramatically. Generative AI in particular has been applied across many industries, often in a haphazard way that leads to negative consequences. But awareness of the risks and limitations has also risen. How do you see the evolution of this technology (and society’s response to it) impacting your thesis in the time since your book came out? Any positive trends to speak of?

VE: While more sophisticated AI certainly may pose new problems in the future, the focus in my research and writing has always been on what is actually happening, right now, in people’s everyday lives. I become concerned when scholars radically reshape our research agendas each time a sexy new technology is launched. This abstract, future-oriented approach leaves us unable (or unwilling?) to address the real-life, concrete impacts of technologies.